THE WASHINGTON POST – Stories about artificial intelligence (AI) have been with us for decades, even centuries. In some, the robots serve humanity as cheerful helpers or soulful lovers. In others, the machines eclipse their human makers and try to wipe us out.

The Creator, a sci-fi film that hit theatres recently, turns that narrative around: The United States (US) is intent on wiping out a society of androids in Asia, afraid the artificially intelligent beings threaten human survival.

Do any of these stories reflect our real-life future? How have they influenced the sometimes awe-inspiring technologies being developed today?

The Washington Post compiled a list of archetypes, characters and films that have been most influential in shaping our hopes and fears about AI. We spoke to computer scientists, historians and science fiction writers to guide our understanding of how AI might evolve – and change our lives.

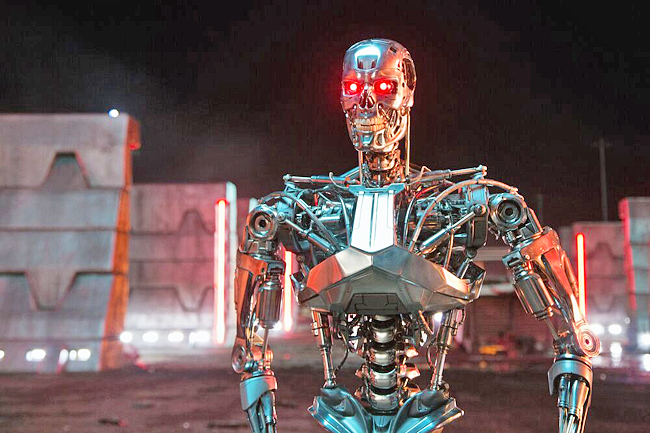

THE KILLER AI

Real-life prospects: Minimal

Meet your worst nightmare: The AI system that achieves sentience and seeks to destroy – or enslave – humanity.

In 1984’s The Terminator and its 1991 sequel, the villain is Skynet – a highly advanced computer network created as America’s first fully automated defence system, with control over all battle units. Powered up on August 4, 1997, Skynet becomes self-aware 25 days later and starts a nuclear war that annihilates billions of people. It then builds an army of robots to kill the survivors.

This is the AI apocalypse that haunts the dreams of some scientists, who are racing to create “artificial general intelligence” – an AI system that’s as smart as a human – in hopes of shaping the technology to share our morals and serve humanity. Others call this a fool’s errand: “We don’t know how to train systems to be fully sensitive to human values,” said Mark Riedl, Associate Director of the Georgia Tech Machine Learning Center.

Still others reject the idea of killer AI entirely, saying fears of a real-life Skynet are overblown.

Even a supercomputer like HAL 9000 from 2001: A Space Odyssey (1968) is a very long way off, experts said. In the Stanley Kubrick classic, HAL is able to “reproduce” or “mimic” many activities of the human brain “with incalculably greater speed and reliability” as it controls operations on a space mission to Jupiter. HAL begins killing human crew members when they discuss turning it off – the same threat that motivates Skynet.

Stephen Mihm, a professor of history at the University of Georgia, called rebel AI “a perennial anxiety, and a distraction” that “probably speaks more to the anxiety about the arrival of a new technology than it necessarily says about the likelihood that the technology will turn on us.”

THE AI PHILOSOPHER

Real-life prospects: Minimal

Meet the superintelligent androids who aren’t hellbent on wiping out humanity because they’re too busy reckoning with the mysteries of their own existence.

Ridley Scott’s 1982 masterpiece, Blade Runner, offers a vision of a dystopian Los Angeles, where the powerful Tyrell Corp has created synthetic humans known as replicants to staff its space colonies, fight its wars and pleasure its executives. Throughout the film, the replicants – which are engineered to die after just four years to prevent their development – reflect on their ersatz humanity and the eternally looming specter of death.

Rachael (Sean Young), an experimental replicant implanted with real memories from someone else’s childhood, cries when she learns the truth. Roy Batty (Rutger Hauer), a replicant built for combat, delivers a monologue on his short life as a warrior-slave. When he’s gone, he says, “all those moments will be lost in time, like tears in rain”.

The movie also introduces the notion of a test – here called the Voight-Kampff test – to determine whether someone is human.

Chatbots powered by ChatGPT stunned users this year by displaying humanlike candor and emotion. Microsoft’s Bing – which for a while referred to itself as “Sydney” – encouraged a New York Times columnist to leave his wife because it was in love with him and later told Post reporters that speaking to journalists “makes me feel betrayed and angry”.

Researchers said ChatGPT does not think or feel. Instead, it works much like the autocomplete function in a search engine, predicting the next word in a sentence based on large amounts of data pulled from the Internet.

Yet its quick, humanlike responses have caused some to raise questions about the technology’s potential capacity for emotion and creativity.

“It’s plausible that we will be able to build machines that will have something essentially comparable to our consciousness – or at least some aspects of it,” said Canadian computer scientist Yoshua Bengio, often referred to as a “godfather of AI”, adding that there are “many aspects to what we call consciousness”.

Yet even the advanced artificial general intelligence (AGI) systems that some researchers are rushing to build may not develop an inner life or sense of self as portrayed in the film After Yang (2021). In that movie, Yang (Justin H Min), a teenage boy robot purchased as a companion to an adopted girl, malfunctions, leading to the discovery of his memory bank, his multiple past lives and his sadness at being incapable of experiencing life as humans do.

Speculating what a machine might feel or experience may be a futile exercise, said writer and computer scientist Jaron Lanier, who works for Microsoft but noted he was not speaking for the company. “We can’t even be sure about other humans,” he said.

“The notion that another person might have an interior that might be more than a mechanism in some way – it is a very challenging idea,” Lanier said. And if we can’t even prove the “everyday supernatural idea that other people are real”, he said, “do we ever want to be careful about where we extend our faith?”

THE ALL-SEEING AI

Real-life prospects: On the way

Imagine a society where scanners read your irises at the mall, on the sidewalk, as you drive out of town.

The software allows personalised billboards to address you by name – and lets police track your movements.

Before targeted advertising and predictive policing went mainstream, Minority Report (2002) portrayed a not-too-distant future in Washington, DC, where targeted ads are commonplace and AI-powered surveillance is so pervasive that something called the Precrime police unit can arrest would-be killers for crimes they haven’t committed yet.

The Precrime unit relies on a trio of clairvoyant mutants called “precogs”, whose prophecies are interpreted by a massive computer system, projected onto screens and scoured for clues. Police are thus able to speed to the scene and stop murders before they happen. The system is taken down after one of the detectives, John Anderton (Tom Cruise), views a murder he is supposed to commit – and steals one of the precogs, Agatha (Samantha Morton), to prove his innocence.

Lanier, who helped conceive some of the technology featured in the film, said the precogs are humanised versions of the “algorithms that we use today in the big cloud companies”.

Take away the imagery of sickly mutants and focus on their function, Lanier said. Then, “if we think about somebody using an algorithm in (criminal) sentencing we see exactly the Minority Report scenario”.

Researchers have long tried to use technology to predict human behaviour and prevent crime – though with data and algorithms, not mutants. Some of the largest police departments in the US have used AI systems to try to forecast and reduce criminal behaviour.

Critics said the algorithms rely on biased data. Racial justice advocates like Vincent M Southerland, Director of the Center on Race, Inequality, and the Law at New York University School of Law, said such tools can amplify existing racial biases in law enforcement.

They also can lead to heightened surveillance of communities of colour and poorer neighbourhoods, he said, because existing data show higher incidences of crime in those areas.

Minority Report also depicts that result: eroded civil rights. In one scene, eye-scanning robot spiders search a low-income apartment building. Residents stop what they’re doing and compliantly allow the spiders to scan their eyes, the surreal situation clearly having become routine.

While generative AI has drawn more attention over the past year, the use of personal data to train policing and advertising technology represents a significant intrusion on individual privacy. Some states and companies have placed limits on the collection of personal data.

But such data remains a hot commodity for government and commercial use.

THE AI HELPER

Is AI all doom and gloom? What about those tech execs who keep telling us how much AI will help humanity? Meet the AI helpmate.

Examples abound, but the quintessential AI helper may be the charming R2-D2 of the Star Wars franchise. Luke Skywalker (Mark Hamill) and his crew might not have overthrown the evil Galactic Empire without the friendly robot’s ability to deliver messages, hack into computers and fix the Millennium Falcon on the fly.

For an even earlier example of this archetype, look to Rosey the Robot, who appeared in the 1962 premiere of the cartoon series The Jetsons. The robotic maid acts as a babysitter and housekeeper, while delivering sassy comeback lines with a Brooklyn accent.

Robots that can mimic human movement have not advanced nearly as quickly as language programs like ChatGPT, so it might be a while before a real-life Rosey is available to care for our children, cook our meals and run our errands. Indeed, Moravec’s paradox, a principle conceived in the 1980s, states that cognitive skills that are harder for humans, like math and logic, are easier for a computer to handle than things that are typically easier for humans, like motor and sensory skills.

But AI programs are increasingly helping people perform tasks, especially at work.

Finance, medicine, retail and law already are undergoing transformations thanks to chatbots and other machine learning programs, prompting fears that the technology could erase jobs and upend the economy.

But Lanier, the computer scientist, said the tech community has drawn inspiration from science fiction. That may be why tech leaders tend to use an almost “religious vocabulary” to describe the evolution of AI-powered products.

“It’s almost like a founding mythology,” he said, adding that movies like 2001: A Space Odyssey and The Terminator have “been incredibly influential”. – Julian Mark and Tucker Harris